Speed Prior

AI specialist Jürgen Schmidhuber on inductive inference, universal Solomonoff prior and measuring probability ...

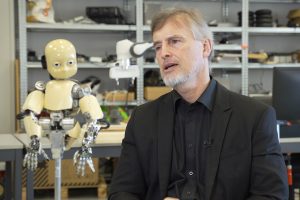

How can we define a robot today? When will we start using driverless cars? What kind of ethics should we build into the machines? Philosopher David Edmonds answers these controversial questions.

This is quite an important topic, because it’s no longer just science fiction. We’ve read about these kinds of machines for decades, if not longer. Writers have imagined robots acting like human beings. And it is becoming increasingly clear that intelligent machines are not that far away. Of course, we have to define what we mean by intelligence.

If a driver crashes a car, or gets drunk and causes an injury, we know whom to hold responsible. Whom do we hold responsible when it comes to robots? Do we hold the person who owns the car responsible? Do we hold responsible the person who manufactured the car? Do we hold responsible the insurance company? Do we hold responsible the person who built the software that decided how the car was going to navigate the streets?

There is another famous example in the same field of moral philosophy, where a train is running down the track and five people are going to die. You are standing next to a very fat man, and you can push him over the footbridge: he will tumble over, land on the track, and stop the train from killing the five people. What’s interesting is that almost nobody thinks that you should push the fat man.

Philosopher and sociologist of science Steve Fuller on collective and individualistic nature of knowledge, eli...

Computer scientist Erol Gelenbe on the idea of AI self-regulation, the goal function of a network and whether ...