Ethics of Machines

Philosopher David Edmonds on relationship between moral philosophy and robotics, artificial intelligence and e...

This lecture is part of the collaboration between Serious Science and the Technology Contests Up Great READ//ABLE.

Deep learning has revolutionized pattern recognition and machine learning. It is about credit assignment in adaptive systems with long chains of potentially causal links between actions and consequences: actions of a learning system and the consequences of that.

Most of the deep learning is about artificial neural networks. Such neural networks are inspired by the human brain, which has roughly 100 billion little processors called neurons; each is connected to roughly 10,000 other neurons on average. Some of these neurons are input neurons that feed the rest with data, such as video through the cameras, audio through the microphones, pain signals, hunger signals and so on. Other processors are output neurons that control the finger muscles or the speech muscles. Most of the neurons are hidden in between where thinking takes place.

So the learning, or the credit assignment, is about finding weights that make the neural network exhibit some desired behaviour, such as controlling a robot. Depending on the problem and on how the units are connected, such behaviour may require long causal chains of computational stages where each stage is setting the stage for the next processing step, where each stage transforms, often in a non-linear way, the aggregate activation of the entire network.

Deep learning in neural networks is about accurately assigning credit across many such computational stages.

That’s the problem because you run into this credit assignment problem, and the question is, what of the many things that I did in the past have an influence on what is happening now such that I can change the things I did in the past such that next time when I come to a similar case, we can profit from the learned experience?

In a sense, sequence-processing recurrent neural networks, or RNNs, are the ultimate neural networks because they are general computers. Recurrent neural networks can emulate the circuits of a microchip: that’s why it’s a general computer. In fully connected RNNs, all units have connections to all non-input units. Unlike feedforward neural networks, recurrent networks can implement loops, recursion, etc. The program of a recurrent neural network is its weight matrix.

Recurrent neural networks can learn programs that mix sequential and parallel information, sequential through time and parallel information processing, in a natural and efficient way. To measure whether credit assignment in a given neural network application is either deep or shallow type, we consider the length of the corresponding credit assignment paths, which are chains of possibly causal connections between subsequent unit activations in the network, for example, from input units through the hidden units, up to the output units in feedforward neural networks where you don’t have feedback connections, or from the input units, through transformations over time in recurrent neural networks.

Feedforward networks with a fixed topology have a problem-independent maximal problem depth, which is bounded by the number of layers of units. Recurrent neural networks, on the other hand, the deepest of all neural networks, may learn to solve problems of potentially unlimited depth, for example, by learning to store in their activation-based short-term memory representations of certain important previous observations for arbitrary time intervals.

Let me give you an example that shows that even static pattern recognition problems sometimes can greatly profit from recurrency, from recurrent networks.

Normally, if you have an image which you want to classify, you take a feedforward network, and you train it at the output to answer ‘yes, that’s a cow in this image’ or ‘no, there’s no cow’. There is a theorem by Cybenko going back to a basic theorem of Kolmogorov, which shows that a single hidden layer in a neural network is enough to approximate any piecewise continuous mapping with arbitrary degrees of precision. In that sense, it is suggested that for neural networks, you need just one hidden layer, which means the depth of a feedforward network isn’t really important because you can do everything with one layer, although the theorem by Kolmogorov does not explain how many units you should have in this one layer. In practice, however, it turns out that if you have many, many layers, then many, many problems become much easier to solve, and if you have recurrent connections in your networks, then it turns out that many problems become solvable that are impossible to solve by feedforward networks.

But now, back to this example, let’s look at the problem of ten-bit parity. What you have as an input pattern is a bit string of length ten, so it’s like 1, 0, 0, 1, 1, 0, 1 and so on. There are 210 different patterns like that, there are 1024 different patterns; and in fact, you can train a feedforward network to classify correctly all these patterns. So the feedforward network has ten input units and a hidden layer (one hidden layer is enough, we know that), which also has maybe ten hidden units, and then there is one single output unit which says ‘yes’, 1.0, in case the number of on bits is even or the opposite otherwise if the number of on bits is odd. You need to present a lot of training examples to learn this mapping.

The same mapping can be learned much more easily by a very tiny small recurrent network that has only five connections, not hundreds of connections like the networks that I just described.

There’s one single input unit where bits are read one bit at a time: 1, 0, 1, 1 and so on. There’s one additional unit which has a bias input which is always on, and then there is one single hidden unit which has a connection to itself, and there’s one output unit from the hidden unit with a connection from the hidden unit and from the bias unit.

And now you’ve got five weights in the system, and you have to find a program that makes this recurrent network solve the parity problem, which actually is very simple because the network has to learn to implement the flip-flop whenever a new input bit comes in: if it’s one, then the internal state of the memory unit should be flipped. If it was one, then it should become zero, and otherwise, if it was zero or close to zero then it should become close to one. This very simple program can be implemented by five connections, and you can use the most stupid learning algorithm ever to train this network: you just randomly initialize all these connections with weights selected between -10 and 10 and see what happens. Does it work on a very limited training set of three examples like, for example, a bit string of size seven, another bit string of size 15, another one of size 21? If it works, then you can be almost sure that it’s also going to work on all other bit strings of unlimited length. We performed that experiment many decades ago.

So a tiny network, a tiny recurrent network, can do everything that a much bigger feedforward network can do and more because the feedforward network is completely going to fail as soon as the input strings, the input data has more than 10 bits: 11 bits or something, because it was never trained on that and it can’t even process 11 bits, while this recurrent network, this very simple elegant network has the natural solution to the parity problem which works for arbitrary sequences. Just to illustrate the basic power of recurrent networks as opposed to feedforward networks.

Back to our main topic of deep learning: in the next two lectures, we will first focus on deep feedforward neural networks, which are now widely used, despite their limitations, in all kinds of applications ranging from computer vision to stock market prediction and whatever, and then in the next part of the lecture we are going to focus on the recurrent networks whose advantages I just explained, and there it will turn out, yes, these recurrent networks are very powerful, but you have to do extra little things to make them work. On the original recurrent networks, they didn’t work so well, but there’s something that is called long short-term memory that works really well, and that’s now on your smartphone, and it’s doing the speech recognition and many other AI applications for billions of people billions of times a day.

Philosopher David Edmonds on relationship between moral philosophy and robotics, artificial intelligence and e...

Neurophysiologist Mikhail Burtsev discusses attempts to model the human brain, the development of logical syst...

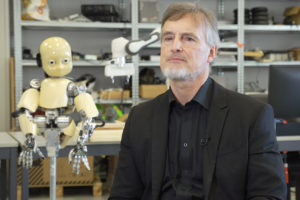

AI specialist Jürgen Schmidhuber on the first deep networks, backpropagation and whether you can train a netwo...