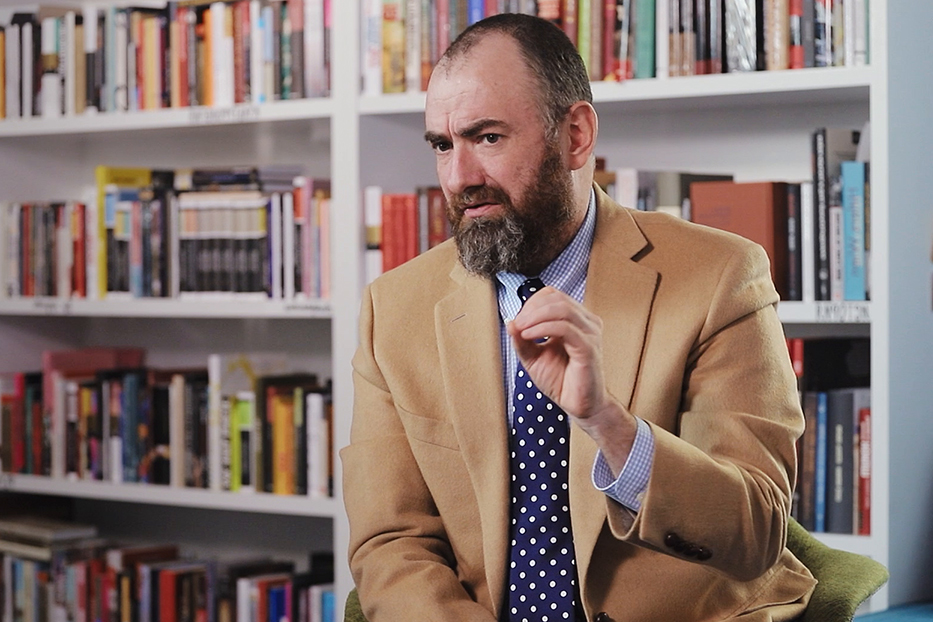

Neural Networks

Computer scientist Erol Gelenbe on the history of neural network research, Lapicque’s equation, and reinforcement learning

videos | June 12, 2019

We can think of neural networks as being a very new topic. In fact, it isn’t: it’s a fairly old one. The first neural network research goes back to the end of the 19th century. There are several examples of this work, and one of the first Nobel Prizes in this area was given to an Italian, Golgi, and to a Spaniard, Ramón y Cajal. This single Nobel Prize, in physiology and medicine, was given to the two of them for complementary work on neural networks. Ramón y Cajal, who was in Madrid, had done a lot of work on the individual neuron. Golgi, who was a professor at the University of Pavia, had looked at the network structure for the first time: he had discovered techniques to color natural brain tissue so that you could see under the microscope the details of the underlying neural networks. So these were two very interesting complementary pieces of work.

So there was this work, but there was also work in Paris by a man called Lapicque. Today we still use Lapicque’s equation concerning neural networks. His study was related to physiology, but it was also related to external observations. He was the first person to indicate that the internal functioning of a neuron, and the communication between neurons, is based on spikes. ‘Spikes’ are very short signals with a rise in electrical potential followed by a fall, so whatever happens up here, in our brains, is based on spikes going between neurons. This all goes back over a hundred and twenty years, even a little bit more.
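To make the idea of a spike concrete, here is a minimal sketch of a leaky integrate-and-fire neuron, the form in which Lapicque’s equation is commonly presented today. The talk does not write the equation out, so the specific form `tau * dV/dt = -(V - v_rest) + R * I`, the function name `simulate_lif`, and every parameter value are assumptions chosen for illustration only.

```python
def simulate_lif(input_current, steps=1000, dt=0.1,
                 tau=10.0, resistance=1.0,
                 v_rest=0.0, v_threshold=1.0, v_reset=0.0):
    """Euler simulation of tau * dV/dt = -(V - v_rest) + R * I.

    All parameter values are illustrative assumptions, not measured data.
    Returns the times at which the potential reached the threshold.
    """
    v = v_rest
    spike_times = []
    for step in range(steps):
        # The potential leaks back toward rest while the input charges it.
        dv = (-(v - v_rest) + resistance * input_current) / tau
        v += dt * dv
        if v >= v_threshold:
            # Threshold reached: record a spike and reset the potential.
            spike_times.append((step + 1) * dt)
            v = v_reset
    return spike_times


weak = simulate_lif(input_current=0.5)    # settles below the threshold
strong = simulate_lif(input_current=2.0)  # charges past the threshold repeatedly
print("spikes with weak input:", len(weak))
print("spikes with strong input:", len(strong))
```

In this sketch a weak input never drives the potential to the threshold, while a stronger input produces a regular train of spikes, each one a rise in potential followed by a reset.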

This is extremely interesting because for a long time this field remained quiet: there was a lot of work going on, but there was limited interest until the 1940s. In the 1940s we have the work of McCulloch and Pitts. These two people offered a simplified model of how a network of neurons might work. The simplification was such that they had to remove the idea of the presence of spikes, and they had a representation which was essentially deterministic, that is non-random, and it was based on analog behavior of circuits. If you look at a book from the late 1940s called ‘Automata Studies’, published by the Institute for Advanced Studies at Princeton, you will see the paper of McCulloch and Pitts, and you’ll also see another very interesting paper by someone called Kleene. Kleene was, if you wish, at the foundation of the mathematics of programming languages: he developed something called ‘regular expressions’. However, his motivation, if you look at the title of the paper, was the following: he thought that he was developing a calculus for the representation of communication in nerve nets; this is what he said he was doing. In fact, what he ended up doing historically was a calculus for representing mathematical languages.

The work on spiking activity at the end of the 19th century and the beginning of the 20th century, together with Golgi’s work on network structure and Ramón y Cajal’s detailed study of the single cell, and then the work done approximately fifty years later by McCulloch and Pitts and by Kleene: these are the foundations of what we call the field of neural networks.

In the field of neural networks there is another very important aspect: you would always wonder why we can use them for something useful, because we see that there are lots of interesting applications. The basis of that is, again, two mathematical theorems. Through these theorems it was shown in the early 80s and late 80s that neural networks can be used as approximators for continuous and bounded functions. For ‘continuous’ we have an intuitive sense of what it means, although it’s a mathematical term, and ‘bounded’ means that if you have a finite input, the output will remain finite. So you’re talking about continuous and bounded functions, and neural networks have been proved to approximate continuous and bounded functions. There are two pieces of work; one of them is mine, from the late 80s, showing that random neural networks are approximators for continuous and bounded functions.

That is the basis for why we can use them so commonly. If you look at a lot of the research that has been going on for the last ten years, which has brought neural networks back into fashion and which has made them practical, what you see is that it is exploiting this approximation property of neural networks. It is what we expect them to do. We take a lot of data, we feed the data to the neural net, and we adjust its parameters so that it approximates the data as well as possible, so that later, if similar data is shown, this neural network will give a correct or similar answer.

So there are two phases: the learning phase and the usage phase. The learning phase is what I’ve described, so you have all this data and you are changing the parameters of the circuit, or of the mathematical algorithm that represents the circuit: you’re changing the parameters so that the data is fitted as closely as possible. When you use it then you’re doing something different: you have a trained network that has learnt already, and you’re giving it an instance of the data and it’s giving you an answer which is very close to the previous things that it has learned. Why? Because it is a very good approximator of continuous and bounded functions.
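The two phases can be sketched in a few lines of plain Python. This is an illustrative toy, not a method from the talk: the one-hidden-layer `tanh` network, the sine target, the function names (`train`, `predict`, `mean_squared_error`), and the step count and learning rate are all assumptions. The learning phase adjusts the parameters by gradient descent to fit samples of a continuous and bounded function; the usage phase simply evaluates the trained network on an input.

```python
import math
import random


def predict(params, x):
    """Usage phase: evaluate the network on a single input."""
    w1, b1, w2, b2 = params
    return sum(w2[j] * math.tanh(w1[j] * x + b1[j]) for j in range(len(w1))) + b2


def mean_squared_error(params, data):
    return sum((predict(params, x) - t) ** 2 for x, t in data) / len(data)


def train(data, hidden=6, steps=3000, lr=0.02, seed=0):
    """Learning phase: fit the parameters by full-batch gradient descent."""
    rng = random.Random(seed)
    w1 = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]
    b1 = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]
    b2 = 0.0
    n = len(data)
    for _ in range(steps):
        g_w1, g_b1, g_w2, g_b2 = [0.0] * hidden, [0.0] * hidden, [0.0] * hidden, 0.0
        for x, target in data:
            h = [math.tanh(w1[j] * x + b1[j]) for j in range(hidden)]
            err = sum(w2[j] * h[j] for j in range(hidden)) + b2 - target
            g_b2 += err / n
            for j in range(hidden):
                # Chain rule through the tanh hidden unit.
                back = err * w2[j] * (1.0 - h[j] ** 2)
                g_w2[j] += err * h[j] / n
                g_w1[j] += back * x / n
                g_b1[j] += back / n
        for j in range(hidden):
            w1[j] -= lr * g_w1[j]
            b1[j] -= lr * g_b1[j]
            w2[j] -= lr * g_w2[j]
        b2 -= lr * g_b2
    return w1, b1, w2, b2


# Samples of a continuous, bounded function (sine is an arbitrary choice).
train_data = [(k / 4.0, math.sin(k / 4.0)) for k in range(-12, 13)]

untrained = train(train_data, steps=0)  # same seed, so the same starting point
trained = train(train_data)

error_before = mean_squared_error(untrained, train_data)
error_after = mean_squared_error(trained, train_data)
print("error before learning:", error_before)
print("error after learning: ", error_after)
print("network at 0.3:", predict(trained, 0.3), "target:", math.sin(0.3))
```

The input 0.3 is not one of the training samples, which is the point of the usage phase: the network is asked about data similar to, but not identical with, what it has seen.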

So this is really the functioning of neural networks. Recent years have seen the emergence of something we call deep learning. In the previous period people used gradient descent learning, which was based on finding efficient algorithms for determining local minima of the error function between the output produced for the input data and the required output. More recently this has been superseded by what one calls deep learning, where you’re using multiple repeated levels of non-linear optimization so that you can adjust the parameters of the circuit as well as possible to the data. Deep learning on the one hand is something, shall we say, new compared to the backpropagation learning before, but on the other hand it’s something that is enabled by the much greater power of the computing devices we use today, so we can do a lot of optimization in a very short time.
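The phrase ‘local minima of the error function’ can be illustrated with a small sketch. The one-dimensional error curve below is hypothetical, chosen only because it has two minima; the function names and the learning rate are likewise assumptions. Gradient descent follows the slope downhill from wherever it starts, so different starting points can end in different minima.

```python
def error(x):
    # Hypothetical error curve with two minima (not taken from the talk).
    return x ** 4 - 3 * x ** 2 + x


def error_gradient(x):
    # Derivative of the curve above.
    return 4 * x ** 3 - 6 * x + 1


def gradient_descent(grad, start, lr=0.01, steps=2000):
    """Repeatedly step against the gradient, starting from `start`."""
    x = start
    for _ in range(steps):
        x -= lr * grad(x)
    return x


left = gradient_descent(error_gradient, start=-2.0)
right = gradient_descent(error_gradient, start=2.0)
print("from -2.0:", left, "error:", error(left))
print("from +2.0:", right, "error:", error(right))
```

Both runs stop where the gradient vanishes, but on this curve the run started on the right settles in a minimum with a higher error than the one found from the left.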

Another form of learning is important to understand, and that’s called reinforcement learning. A lot of the results concerning games that are publicized as being a big success are based on a technique which is also quite old, which goes back to psychologists and which is called ‘reinforcement learning’. It was redeveloped thirty years ago by a computer scientist called Sutton, and before that there was the work of psychologists describing reinforcement learning that had been observed in human beings and in animals. Reinforcement learning is simply that you do something, and then, based on your success, you modify your parameters, your ways of doing the thing, so that you hope to become more successful next time. You repeat this, so you always reinforce the options you have taken that increase your success, and you weaken the options you have taken that reduce your success. This applies to tasks, to different activities, or, for instance, to tracking some object flying in the sky that you’re trying to follow: you can use reinforcement learning to get better and better at tracking it, by changing your actions so as to follow it more closely.

So in a way, if you think of a game where you’re carrying out a sequence of actions, here you’re trying to change your rules of behavior so that at each step you’re doing better than before, and you’re saying: well, I did this and it wasn’t very successful, I did that and it was successful, so I will do that because that is better than this. This is the process known as reinforcement learning. Reinforcement learning itself can be detailed and can be supported by other methods such as, for instance, deep learning or gradient descent learning and so on. So they can be accessories to it.
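The reinforce-and-weaken loop described above can be sketched as follows. This is a deliberately simplified, hypothetical scheme, not Sutton’s algorithm or any published method: the function name `reinforce`, the success probabilities, the step size, and the floor on the preferences are all assumptions made for illustration.

```python
import random


def reinforce(success_probability, rounds=5000, step=0.1, seed=0):
    """Strengthen options that succeed and weaken options that fail.

    success_probability[i] is the (hidden) chance that option i succeeds.
    Returns the learned preference for each option.
    """
    rng = random.Random(seed)
    preference = [1.0] * len(success_probability)
    options = range(len(preference))
    for _ in range(rounds):
        # Pick an option with probability proportional to its preference.
        action = rng.choices(options, weights=preference)[0]
        succeeded = rng.random() < success_probability[action]
        if succeeded:
            preference[action] += step  # reinforce
        else:
            # Weaken, but keep a small floor so the option can still be tried.
            preference[action] = max(0.05, preference[action] - step)
    return preference


preferences = reinforce([0.2, 0.8])
print("preference for the rarely successful option:", preferences[0])
print("preference for the often successful option: ", preferences[1])
```

After many repetitions the option that succeeds more often ends up with the larger preference, so it is chosen more and more frequently.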

In conclusion, I think on the one hand we’re dealing with a very old subject: well-established, with lots of links with neurophysiology now, very connected to brain studies. On the other hand we are dealing with technologies which have become possible because of the dramatic increase in power in computers. We know, on the one hand, that these objects are very good approximators for continuous and bounded functions and that’s why we use them; on the other hand, we know that a lot of things we ask them to do are undecidable, that is even the most powerful computer would not be able to guarantee an answer to these questions and yet we use these devices in a certain heuristic manner.

In the field of neural networks there are two very important open questions. One is reproducibility: we know that even small unexpected perturbations of the data that’s presented can strongly influence the outcome. For instance, you are trying to learn a face, and the face is presented not in the usual manner but perhaps at an angle, or with special shadows, or in an unusual position. So what are the properties of the data that we are using to train neural networks that will avoid these instabilities in the ability of the neural networks to take correct decisions? This is a very important problem which arises all the time.

The second important question is what the actual class of problems is, other than the obvious ones, which are continuous and bounded functions, that we can address with neural networks. Outside of this world (and there are so many other problems that we have to address!), outside of these well-understood bounds, what is the class of problems for which neural networks can be used reliably? This question is wide open.