Brain Language Research

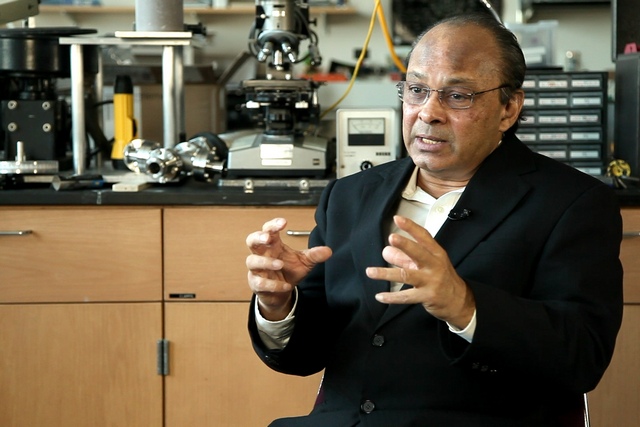

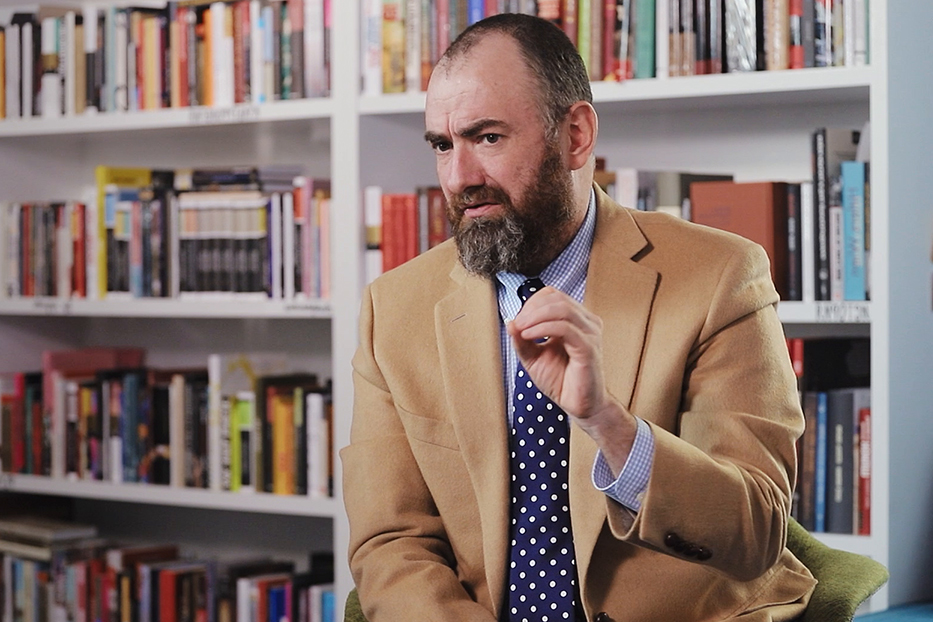

Neuroscientist Friedemann Pulvermüller on brain-language relations, brain lesions, and simulation of cognitive processes

videos | November 26, 2017

I would like to say a few words about the brain basis of language. From neurological patients and studies of the brain we know that some parts of the brain are particularly important for language. In fact, the whole evolutionarily new part of the brain, the neocortex, which is especially large in humans, is important for language. Within that big structure, however, there are specific parts that are of particular relevance for language. One of them is in the frontal cortex; the second is in the temporal cortex, behind the left ear. For most of us, at least, it is the left hemisphere that is most important for language.

Why is that? Today we have models that can account for this, at least in part. We know that the brain has a particular neuroanatomical structure. The articulators of the mouth are controlled by a region called the 'motor cortex', which lies close to the frontal language region. The nerve fibers from our ears, which tell the brain about the acoustic signals we process, reach areas in the temporal cortex, and these areas lie close to the temporal regions that are also particularly relevant for language.

Why, then, would the language regions make their home close to the inputs and outputs of language? An explanation can be provided by neurobiological models in which we build neural networks: artificial, brain-like structures with a motor cortex, the frontal language region, the auditory cortex (the sound-processing areas) and the areas around them. We then present this network with the kind of information we would produce or receive during language acquisition, the early stages of language learning. If I play the part of a baby or an early language learner, I may first say meaningless syllables like 'ba ba ba' or single words like 'bat' or 'car'. The mouth movements that produce these words of course require motor activation. At the same time, there is activation in the auditory system as I hear myself speaking. So at two distant ends of the brain, the motor cortex in the front and the auditory cortex in the back, we have correlated activation.

I mentioned the term ‘correlation’. It is very important, because our brain is particularly good at mapping correlations. It has learning rules that change the strength of the connections between nerve cells according to how correlated their firing is. If, when we speak, there is correlated activity at different ends of the brain, the links between these nerve cells will be strengthened. Because there are actually no strong direct connections between the motor cortex and the auditory cortex, the activation has to take a detour through adjacent regions: other parts of the frontal cortex and nearby parts of the temporal cortex. And those regions happen to be linked with each other.
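The correlation-based learning described above can be illustrated with a minimal sketch. It assumes a standard covariance form of the Hebbian rule, in which a connection grows when two units deviate from their mean activity in the same direction and shrinks when they deviate in opposite directions. This is a textbook simplification, not the model used in the talk; the three units (motor, auditory, unrelated), the activity sequence and the function name `covariance_learning` are hypothetical.

```python
import numpy as np

def covariance_learning(patterns, learning_rate=0.25):
    """Covariance form of Hebbian learning over a sequence of activity patterns.

    patterns: array of shape (time_steps, units), one row per moment in time.
    Returns a symmetric weight matrix without self-connections.
    """
    mean_activity = patterns.mean(axis=0)
    n_units = patterns.shape[1]
    weights = np.zeros((n_units, n_units))
    for activity in patterns:
        deviation = activity - mean_activity
        # Weight (i, j) grows when units i and j are above (or below) their means together,
        # and shrinks when one is above its mean while the other is below
        weights += learning_rate * np.outer(deviation, deviation)
    np.fill_diagonal(weights, 0.0)
    return weights

# Hypothetical toy units: 0 = motor, 1 = auditory, 2 = unrelated.
# In rows 0 and 2 the learner says a word, so motor and auditory units fire together;
# the unrelated unit fires independently of speaking.
patterns = np.array([
    [1.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [1.0, 1.0, 1.0],
    [0.0, 0.0, 0.0],
])
weights = covariance_learning(patterns)
print(weights[0, 1])  # motor-auditory link: 0.25
print(weights[0, 2])  # motor-unrelated link: 0.0
```

Because the rule tracks covariance rather than mere co-occurrence, the unrelated unit gains no net connection even though it is sometimes active while the learner speaks.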

That is a particularly important feature of the human brain. It has only recently been discovered that there are remarkably strong connections between the frontal and the temporal language areas, especially in the left hemisphere. These connections carry information back and forth between the two. So the human brain is especially well equipped for mapping the correlation between motor and auditory neural activation; it is cut out for it, if you wish. The idea I am trying to put forward is that during early language acquisition we build neuronal assemblies, circuits of strongly connected nerve cells, one for every word and perhaps for larger constructions. In this way we build up a vocabulary of words and longer chunks of language, which then become consolidated over time and form the building blocks of language.
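The idea of a neuronal assembly, a circuit that can be reactivated as a whole from part of its input, is often illustrated with a Hopfield-style attractor network. The sketch below uses that textbook model purely as a stand-in; it is an assumption for illustration, not the simulation described in the talk. Two hypothetical 'words' are stored as ±1 patterns over four 'motor' and four 'auditory' units, and the helper names `store` and `recall` are invented here.

```python
import numpy as np

def store(patterns):
    """Hebbian outer-product storage of +/-1 patterns, without self-connections."""
    weights = sum(np.outer(p, p) for p in patterns).astype(float)
    np.fill_diagonal(weights, 0.0)
    return weights

def recall(weights, cue, steps=5):
    """Synchronous updates: each unit takes the sign of its summed input."""
    state = cue.astype(float)
    for _ in range(steps):
        field = weights @ state
        # A unit whose input is exactly zero keeps its current value
        state = np.where(field > 0, 1.0, np.where(field < 0, -1.0, state))
    return state

# Hypothetical "words": units 0-3 are motor, units 4-7 are auditory
word_a = np.array([1, 1, -1, -1, 1, -1, 1, -1])
word_b = np.array([1, -1, 1, -1, -1, -1, 1, 1])
weights = store([word_a, word_b])

# Cue with only the motor half of word_a; auditory units start silent (0)
completed = recall(weights, np.array([1, 1, -1, -1, 0, 0, 0, 0]))
print(np.array_equal(completed, word_a))  # True
```

Presenting only the 'articulatory' half is enough for the strengthened links to reactivate the 'auditory' half, which is the pattern-completion property usually attributed to cell assemblies.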

So our understanding of brain-language relations is improving slowly but steadily. I have only talked about words, which is a very simple topic compared with complex syntax and the meaning of words and larger constructions. Finally, there are social interactions, in which language plays a big role. These biological mechanisms kick in at a very basic level, but at the higher, more complex levels we find similar situations. Step by step, the language machinery can be explained a little better through neurocomputational work and detailed study of patients. We can learn more about it by using neuroimaging and modern techniques for stimulating parts of the brain.

One possibility is to look at patients with language disturbances and describe their problems. They may have trouble naming objects (saying ‘that’s a glass’) or problems with understanding (if you show them a glass and a hand and ask which of them is the hand, they may point at the wrong object). Another important approach is neuroimaging: we use fMRI, EEG and MEG to look at the brain activation patterns elicited by speech sounds, words, small constructions, complex sentences or whole interactions between communication partners, for example when I ask somebody to give me something and he or she responds. These elicit specific brain activation patterns that we can map.

A different strategy is to have healthy people play the role of patients. Magnetic stimulation can slightly alter how parts of the brain function, so we can produce mini-lesions or mini-activations that are much more focal than large brain lesions. We can then ask, for example, whether a small part of the brain is causally involved in language understanding.

We now know a little more about brain-language relationships, and we can even address the question of why the language regions are located where they are in the brain. We can also use neural network simulations that mimic the brain to explain why certain aphasias occur after specific lesions.
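A toy version of such a lesion simulation can be sketched as follows. The assumptions are deliberate simplifications: a single stored 'word' in a Hopfield-style network, four hypothetical 'motor' and four 'auditory' units, and a lesion modeled as cutting every connection between the two groups. None of this is the author's model, and `disconnect` and `recall` are hypothetical helper names; the sketch only shows how a simulated lesion can be compared with the intact network.

```python
import numpy as np

# Hypothetical toy network: units 0-3 are "motor", units 4-7 are "auditory"
MOTOR = [0, 1, 2, 3]
AUDITORY = [4, 5, 6, 7]
word = np.array([1, 1, -1, -1, 1, -1, 1, -1], dtype=float)

# Hebbian storage of one +/-1 pattern, without self-connections
weights = np.outer(word, word)
np.fill_diagonal(weights, 0.0)

def disconnect(weights, group_a, group_b):
    """Simulated lesion: remove all connections between two groups of units."""
    damaged = weights.copy()
    damaged[np.ix_(group_a, group_b)] = 0.0
    damaged[np.ix_(group_b, group_a)] = 0.0
    return damaged

def recall(weights, cue, steps=5):
    """Synchronous sign updates; a unit with zero input keeps its current value."""
    state = cue.astype(float)
    for _ in range(steps):
        field = weights @ state
        state = np.where(field > 0, 1.0, np.where(field < 0, -1.0, state))
    return state

# Cue: the motor half of the word, auditory units initially silent (0)
cue = np.array([1, 1, -1, -1, 0, 0, 0, 0], dtype=float)
intact = recall(weights, cue)
lesioned = recall(disconnect(weights, MOTOR, AUDITORY), cue)
print(np.array_equal(intact, word))  # True: auditory half is completed
print(lesioned[AUDITORY])            # [0. 0. 0. 0.]: auditory half stays silent
```

Cutting the motor-auditory links leaves the motor half of the pattern intact but prevents it from reactivating the auditory half, the kind of contrast between intact and damaged networks that such simulations are used to explore.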